Author: admin

[Video] Exposing Trump, Musk, and the Other Techno-Fascists: My Interview with Blast

For years, we’ve been bombarded with the idea that artificial intelligence is the answer to all our problems. A revolution,…

![[Video] My Conversation With Moira Weigel at Harvard’s Berkman Klein Center](https://www.casilli.fr/wp-content/uploads/2025/02/Harvard-logo-design-480x214.jpg)

[Video] My Conversation With Moira Weigel at Harvard’s Berkman Klein Center

During my talk at Harvard University, I presented my new book Waiting for Robots: The Hired Hands of…

Review of My Book “Waiting for Robots” in the MIT Technology Review

MIT Technology Review has highlighted my book Waiting for Robots: The Hired Hands of Automation as one of the essential…

Selected as ‘Nature’ Journal Top Science Pick: Another Major Honor for my New Book

Following its glowing review in the journal Science last month, Waiting for Robots: The Hired Hands of Automation (University of…

[Podcast] New Books Network Podcast: My Interview on “Waiting for Robots” Now Live!

I’m excited to announce that my book talk on Waiting for Robots: The Hired Hands of Automation is now available…

Top Journal Science Reviews My New Book: “A Must-Read on AI, Labor, and Automation”

Exciting news! Science has just published an excellent review of my book, Waiting for Robots: The Hired Hands of Automation…

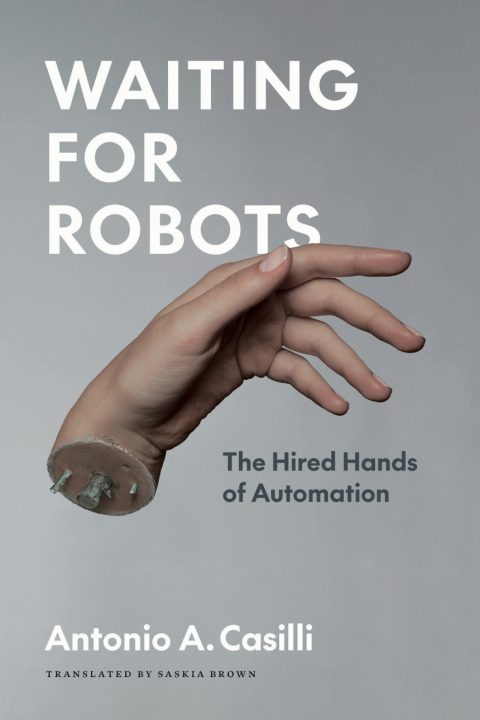

My New Book ‘Waiting for Robots’ Is Out Now from University of Chicago Press: How AI Companies Extract Value From Every Human on Earth

I am excited to announce the publication of my new book Waiting for Robots: The Hired Hands of Automation (University of Chicago Press, 2025) on January 8th. Read more about media coverage and praise for it.

AI’s Labor Paradox Exposed: My Research in Treccani Encyclopedia and MicroMega Journal

I was honored to author the “Digital Labor” (Lavoro Digitale) entry for the Enciclopedia Italiana Treccani, a prestigious European academic…

AI Workers Go To Brussels: Paving the Way for an Unprecedented Dialogue with the European Parliament

On November 21st, 2024, I’ll join forces with my colleague Milagros Miceli and MEP Leïla Chaibi (The Left) at the…

Watch The Trailer Of Our New Documentary Exposing The True Price Of Artificial Intelligence

Explore the human and environmental costs of AI in our upcoming documentary, along with details on the workshop and open conversation at Cambridge.

![[Video] Exposing Trump, Musk, and the Other Techno-Fascists: My Interview with Blast](https://www.casilli.fr/wp-content/uploads/2025/03/blast1-480x274.png)

![[Podcast] New Books Network Podcast: My Interview on “Waiting for Robots” Now Live!](https://www.casilli.fr/wp-content/uploads/2025/02/NBN-logo-480x320.jpg)