The recent ban of ChatGPT by the Italian data protection authority, the Garante della privacy, has caused quite a stir in the tech community. On March 31, 2023, in the wake of a “privacy incident” caused by a bug that displayed other users’ chat history, the authority announced that it was banning the AI language model in Italy for non-compliance with the GDPR. However, the service remained accessible to Italian users. That is, until later that day, when OpenAI decided to do something about it…

In the evening, Sam Altman, CEO of OpenAI, the company behind ChatGPT, announced on Twitter that the service would no longer be available in Italy. Altman stated that the decision was made out of deference to the Italian government and its privacy laws.

This tweet contains some inaccuracies. The Italian “government” has nothing to do with this story: the Garante della privacy is an independent data protection authority. Moreover, OpenAI made this decision on its own, not because the Italian authority seized its servers or data centers. The Italian privacy regulator lacks the technical means to shut down the service.

The Garante merely ordered “a provisional limitation of the processing of personal data” of persons located in Italian territory (regardless of citizenship or residence), effective immediately. OpenAI was given 20 days to inform the authority of its progress toward compliance.

The company still had time to address any issues. The decision to discontinue the service appears to be a corporate one, presumably meant to elicit public sympathy and pressure the data authority into reconsidering. It is an odd move for a company that, in its GPT-4 Technical Report published only a few weeks earlier, emphasized the need for “effective regulation.”

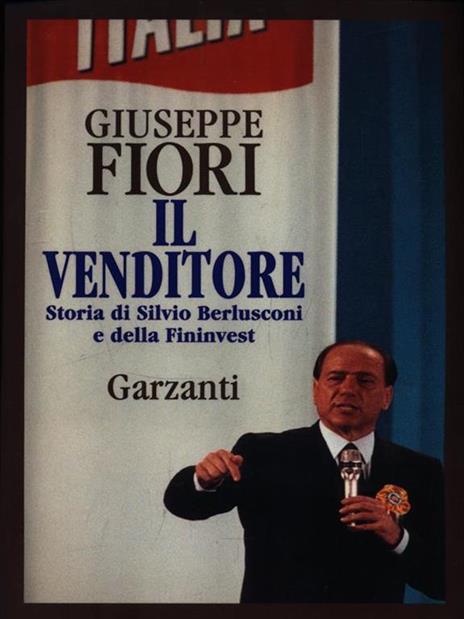

It’s hard not to see this as a ruse reminiscent of old-time television tycoons trying to rally the audience against law enforcement. There is an obvious parallel with a very Italian story from 1984, when Silvio Berlusconi was illegally broadcasting three national TV networks through a web of small local stations. When judges in a few Italian cities intervened, Berlusconi could have complied with the law by broadcasting only locally. Instead, he chose to shut down his networks. Next thing you know, people were out in the streets, chanting the names of the suspended channels. The government of the then prime minister rushed through ad hoc legislation. Despite the opposition of left-wing parties, three “decreti Berlusconi” (Berlusconi decrees) legalized the tycoon’s system.

This historical example is a warning of what could happen if policymakers give in to such blackmail tactics. Altman’s decision to discontinue ChatGPT in Italy may not remain an isolated case: if other companies follow suit, it could set a dangerous precedent. It’s essential that tech companies comply with privacy laws and regulations, but it’s equally important that they don’t use their power to manipulate public opinion or bully regulators.

Acknowledgments: My thanks go out to Italian lawyers Giovanni B. Gallus and Carlo Blengino, whose insightful comments helped me revise and correct some inaccuracies in the first draft.