It appears that we have all become the targets of a propaganda campaign lately. Not interference by war-criminal governments, nor far-right parties’ agitprop, though there is plenty of that. I’m talking about corporate AI anti-labor propaganda. If you live in the Bay Area, your streets are filled with this crap.

The most egregious example is a Wired article questioning whether AI should have the right to strike and join unions. “In Silicon Valley,” writes the author, “there’s a small but growing field called model welfare, which is working to figure out whether AI models are conscious and deserving of moral considerations, such as legal rights.” On its face, it’s just another provocation by journalists with no loyalty except to their Big Tech overlords. In practice, it’s a polished piece of disinformation.

The people mentioned in this piece, raving about “model welfare” and unions for AI, are paid by those who have spent years crushing labor welfare and denying rights to actual workers trying to unionize. Model welfare is a red herring. The push to grant rights to AI goes hand in hand with spending millions to hide the ugly side of the AI supply chain: crushing unions in the US and abroad, subcontracting misery, and traumatizing moderators who filter toxic content for pennies. Millions of human data workers train and maintain these systems while companies privatize gains and socialize harms. If the Valley truly cared about welfare, it would start with human workers: living wages, collective bargaining, safety, and accountability.

As a researcher with the DiPLab program and a coordinator of INDL (the International Network on Digital Labor), I have engaged with hundreds of AI workers and organizers in Africa and Latin America. Over the years, they have watched Big Tech companies obstruct workers’ rights. While we shot our TV documentary “In the Belly of AI” in Kenya, lawyers and police harassed us to prevent us from even speaking to union leaders.

Now, suddenly, the machine should have rights. AI companies refuse to respect the rights of the people who produce their systems but want to grant rights to the products. It’s as if Nike were suggesting that we should overlook the workers in sweatshops and instead focus on the rights of sneakers.

That’s the play: shift focus from the real material conditions of exploitation and denied rights to a fake philosophy that glorifies the product while shielding corporations from responsibility. The “field of research” of model welfare isn’t being built by disinterested scholars. Its architects sit in the same orbit as major AI firms.

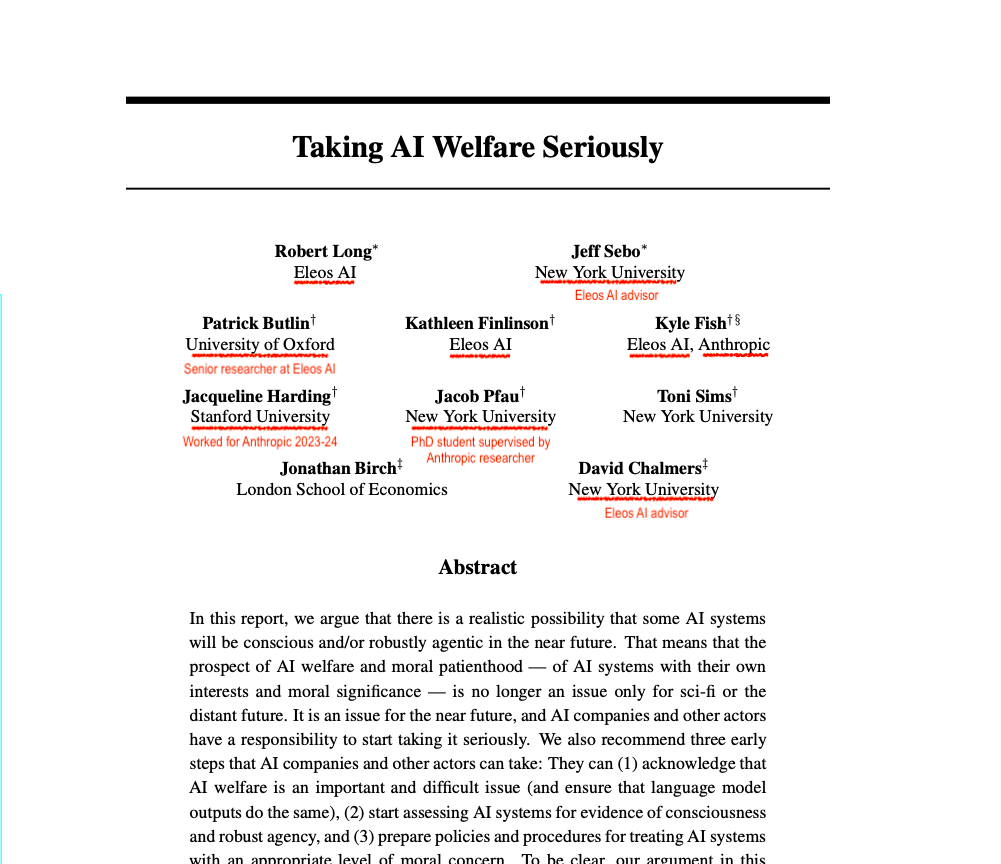

I first encountered this issue last year, while discussing a paper titled “Taking AI Welfare Seriously” with colleagues at the Minderoo Centre for Technology and Democracy at Cambridge. Although the authors appeared to be affiliated with prestigious universities, a closer look revealed that the majority were connected to a nonprofit called Eleos AI. Much like OpenAI, this is a “Trojan horse nonprofit” with a sizeable for-profit branch and a commercial agenda. Notably, Eleos AI has strong ties to Anthropic, and the lead author of the paper now heads Claude’s AI welfare research program.

The paper repackages model welfare as an ethical consideration for nonhuman beings. The authors pompously describe it as “assessing the welfare and moral patienthood of nonhumans, including other animals and AI systems”, but in the end, it’s a sophism: if you don’t treat LLMs as living, feeling beings, then you’re no better than those who harm animals. It’s clever, distracting, and ultimately dangerous.

AI ethics is rife with this kind of corporate influence. As Meredith Whittaker argued in a 2021 essay, industry actors use ethical AI to “coopt and neutralise critique”. This is done in part by funding the “weakest critics, often institutions and coalitions that focus on so-called AI ethics, and frame issues of tech power and dominance as abstract governance questions.”

Model welfare, too, is a clearinghouse for corporate money and a shield against regulation—especially labor regulation. Critics are urged to prioritize the welfare of AI as a nonhuman entity, while companies blatantly disregard the very humans who bring AI into existence. Debating model sentience while ignoring the living, breathing people who make these systems run is rank hypocrisy. It’s a PR smoke screen. It’s a rhetorical sleight of hand. It’s the pot-maker calling the kettle a person.